I welcome your thoughts on this post, but please read through to the end before commenting. Also, you’ll find the related code (in R) at the end. For those new to this blog, you may be taken aback (though hopefully not bored or shocked!) by how I expose my full process and reasoning. This is intentional and, I strongly believe, much more honest than presenting results without reference to how many different approaches were taken, or how many models were fit, before everything got tidied up into one neat, definitive finding.

Fast summaries

TL;DR (scientific version): Based solely on year-over-year changes in surface temperatures, the net increase since 1881 is fully explainable as a non-independent random walk with no trend.

TL;DR (simple version): Statistician does a test, fails to find evidence of global warming.

Introduction and definitions

As so often happens to terms which have entered the political debate, “global warming” has become infused with additional meanings and implications that go well beyond the literal statement: “the earth is getting warmer.” Anytime someone begins a discussion of global warming (henceforth GW) without a precise definition of what they mean, you should assume their thinking is muddled or their goal is to bamboozle. Here’s my own breakdown of GW into nine related claims:

- GW1: The earth has been getting warmer.

- GW2: This warming is part of a long term (secular) trend.

- GW3: Warming will be extreme enough to radically change the earth’s environment.

- GW4: The changes will be, on balance, highly negative.

- GW5: The most significant cause of this change is carbon emissions from human beings.

- GW6: Human beings have the ability to significantly reverse this trend.

- GW7: Massive, multilateral cuts to emissions are a realistic possibility.

- GW8: Such massive cuts are unlikely to cause unintended consequences more severe than the warming itself.

- GW9: Emissions cuts are better than alternative strategies, including technological fixes (e.g. iron fertilization), or waiting until scientific advances make better technological fixes likely.

Note that not all proponents of GW believe all nine of these assertions.

The data and the test (for GW1)

The only claims I’m going to evaluate are GW1 and GW2. For data, I’m using surface temperature information from NASA. I’m only considering the yearly average temperature, computed by finding the average of four seasons as listed in the data. The first full year of (seasonal) data is 1881, the last year is 2011 (for this data, years begin in December and end in November).

According to NASA’s data, in 1881 the average yearly surface temperature was 13.76°C. Last year the same average was 14.52°C, or 0.76°C higher (standard deviation on the yearly changes is 0.11°C). None of the most recent ten years have been colder than any of the first ten years. Taking the data at face value (i.e. ignoring claims that it hasn’t been properly adjusted for urban heat islands or that it has been manipulated), the evidence for GW1 is indisputable: The earth has been getting warmer.

Usually, though, what people mean by GW is more than just GW1; they mean GW2 as well, since without GW2 none of the other claims are tenable, and the entire discussion might be reduced to a conversation like this:

“I looked up the temperature record this afternoon, and noticed that the earth is now three quarters of a degree warmer than it was in the time of my great great great grandfather.”

“Why, I do believe you are correct, and wasn’t he the one who assassinated James A. Garfield?”

“No, no, no. He’s the one who forced Sitting Bull to surrender in Saskatchewan.”

Testing GW2

Do the data compel us to view GW as part of a trend and not just background noise? To evaluate this claim, I’ll be taking a standard hypothesis testing approach, starting with the null hypothesis that year-over-year (YoY) temperature changes represent an undirected random walk. Under this hypothesis, the YoY changes are modeled as independent draws from a distribution with mean zero. The final temperature represents the sum of 130 of these YoY changes. To obtain my sampling distribution, I’ve calculated the 130 YoY changes in the data, then subtracted the mean from each one. This way, I’m left with a distribution with the same variance as in the original data. YoY jumps in temperature will be just as spread apart as before, but with the whole distribution shifted over until its expected value becomes zero. Note that I’m not assuming a theoretical distributional form (e.g. Normality); all of the data I’m working with is empirical.

My test will be to see if, by sampling 130 times (with replacement!) from this distribution of mean zero, we can nonetheless replicate a net change in global temperatures that’s just as extreme as the one in the original data. Specifically, our p-value will be the fraction of times our Monte Carlo simulation yields a temperature change of greater than 0.76°C or less than -0.76°C. Note that mathematically, this is the same test as drawing from the original data, unaltered, then checking how often the sum of changes resulted in a net temperature change of less than 0 or more than 1.52°C.
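A minimal sketch of the test just described, assuming synthetic stand-in data rather than the NASA series. The function name `random_walk_pvalue` and the vector `fake_changes` are made up for illustration; the full code actually used for this post appears at the end.

```r
# Illustrative only: `fake_changes` is synthetic, not the NASA temperature data.
set.seed(1)
fake_changes <- rnorm(130, mean = 0.6, sd = 11)   # YoY changes, hundredths of a degree

# Two-sided Monte Carlo p-value for the net change under a zero-mean random walk.
random_walk_pvalue <- function(raw_changes, trials = 10000) {
  centered   <- raw_changes - mean(raw_changes)   # shift so the expected change is zero
  net_change <- sum(raw_changes)                  # observed net change over the period
  n          <- length(raw_changes)
  # Each column is one simulated history of n changes drawn with replacement
  sims   <- matrix(sample(centered, n * trials, replace = TRUE), nrow = n)
  totals <- colSums(sims)
  # Fraction of simulated histories ending further from zero than the observed one
  mean(abs(totals) > abs(net_change))
}

p <- random_walk_pvalue(fake_changes)
p
```

Drawing all the samples into one matrix and summing the columns avoids an explicit loop; it does the same job as a `for` loop over trials, just faster when no plotting is needed.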

I have not set a “critical” p-value in advance for rejecting the null hypothesis, as I find this approach to be severely limiting and just as damaging to science as J-Lo is to film. Instead, I’ll comment on the implied strength of the evidence in qualitative terms.

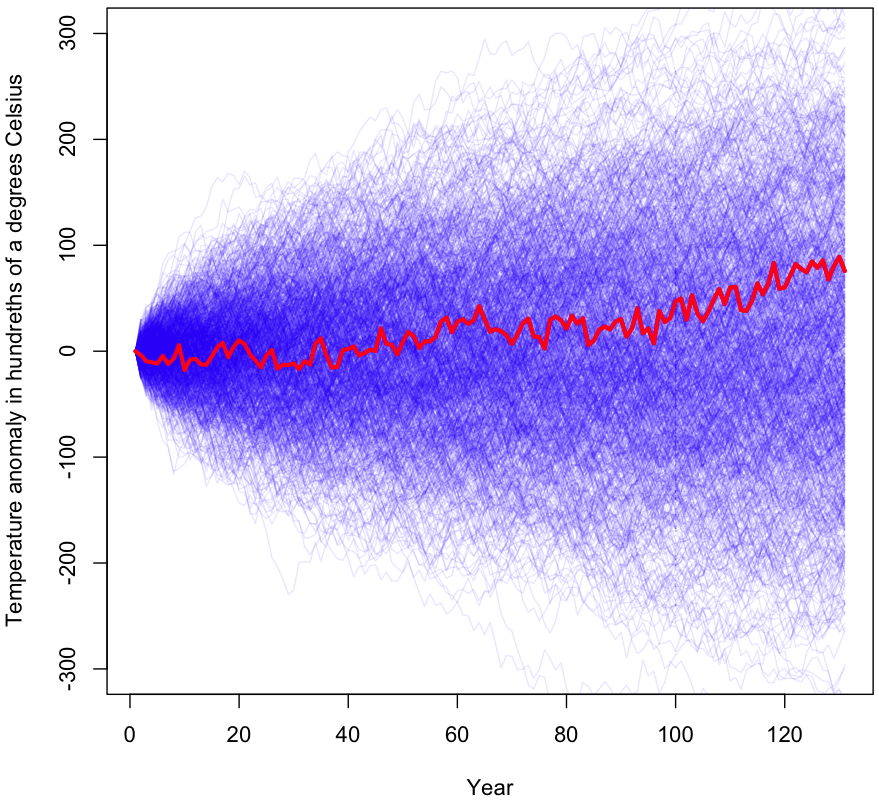

Initial results

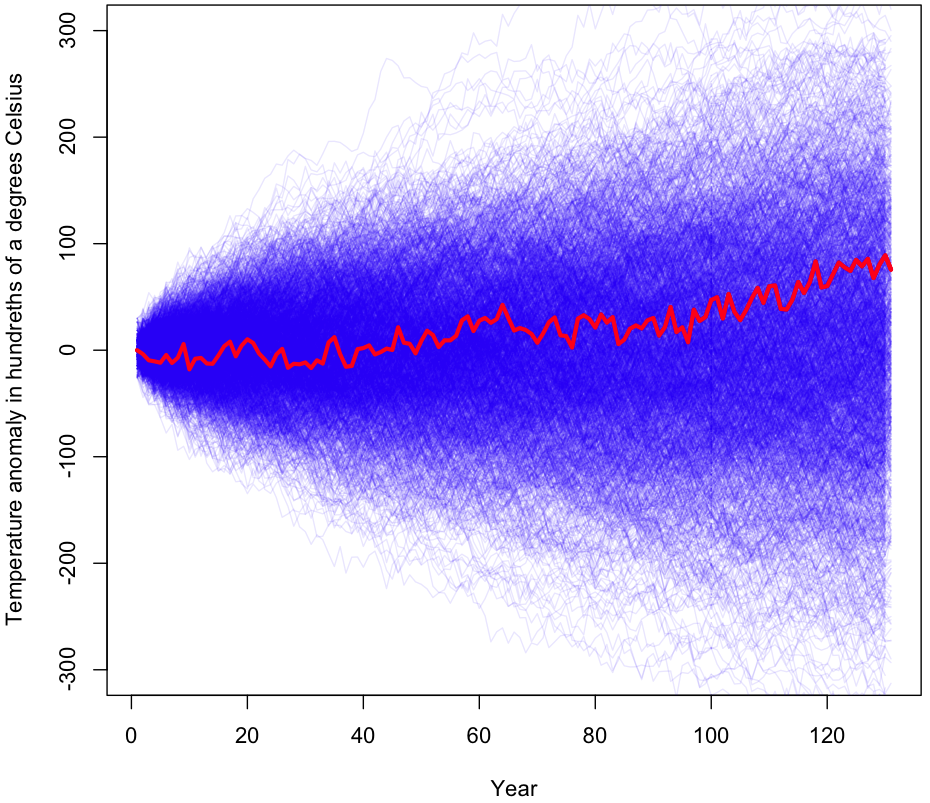

The initial results are shown graphically at the beginning of this post (I’ll wait while you scroll back up). As you can see, a large percentage of the samples gave a more extreme temperature change than what was actually observed (shown in red). During the 1000 trials visualized, 56% of the time the results were more extreme than the original data after 130 years’ worth of changes. I ran the simulation again with millions of trials (turn off plotting if you’re going to try this!); the true p-value for this experiment is approximately 0.55.
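To see why 1000 trials and millions of trials give nearly the same answer, here is a rough sketch of the sampling error of a simulated p-value. It assumes independent trials and uses the usual binomial standard error; the helper name `mc_standard_error` and the choice of exactly one million trials for the larger run are illustrative assumptions.

```r
# Approximate standard error of a p-value estimated from `trials` independent simulations
mc_standard_error <- function(p_hat, trials) sqrt(p_hat * (1 - p_hat) / trials)

se_1000    <- mc_standard_error(0.56, 1000)   # the 1000-trial run that was plotted
se_million <- mc_standard_error(0.55, 1e6)    # a hypothetical run of one million trials
round(c(se_1000, se_million), 4)
```

With 1000 trials the estimate is only good to within a couple of percentage points, which is consistent with seeing 0.56 in one run and about 0.55 in a much larger one.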

For those unfamiliar with how p-values work, this means that, assuming temperature changes are randomly plucked out of a bundle of numbers centered at zero (i.e. no trend exists), we would still see equally dramatic changes in temperature 55% of the time. Under even the most generous interpretation of the p-value, we have no reason to reject the null hypothesis. In other words, this test finds zero evidence of a global warming trend.

Testing assumptions Part 1

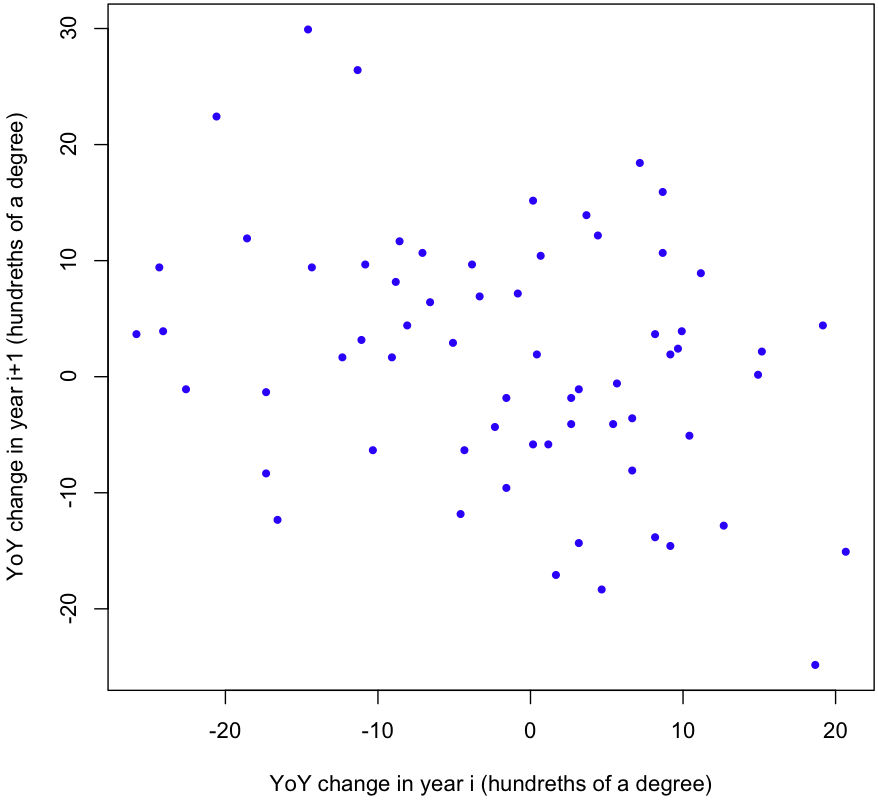

But wait! We still haven’t tested our assumptions. First, are the YoY changes independent? Here’s a scatterplot showing the change in temperature one year versus the change in temperature the next year:

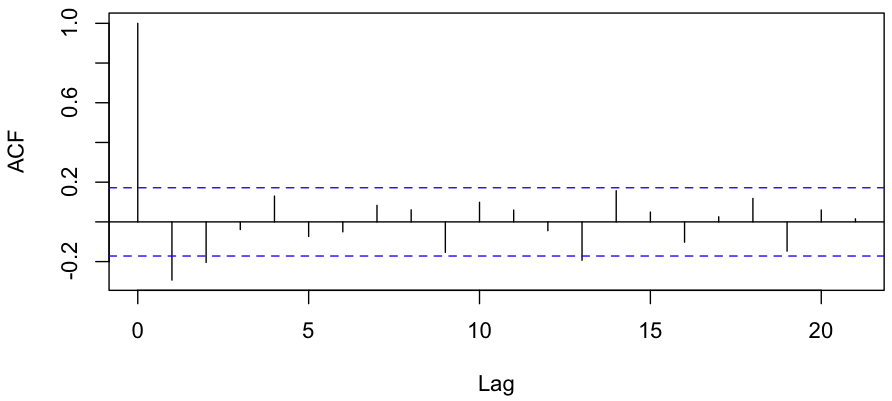

Looks like there’s a negative correlation. A quick linear regression gives a p-value of 0.00846; it’s highly unlikely that the correlation we see (-0.32) is mere chance. One more test worth running is the ACF, or the Autocorrelation function. Here’s the plot R gives us:

Evidence for a negative correlation between consecutive YoY changes is very strong, and there’s some evidence for a negative correlation between YoY changes which are 2 years apart as well.
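As a small sketch of how these correlations can be computed, the snippet below uses a synthetic series deliberately built to have negatively correlated consecutive changes. It is not the NASA data, and `fake_changes` is a made-up name.

```r
# Synthetic stand-in: differences of independent noise have negative lag-1 correlation
set.seed(42)
fake_changes <- diff(rnorm(131, sd = 10))   # 130 YoY-style changes

n <- length(fake_changes)

# Lag-1 correlation using every consecutive pair of changes
lag1_all <- cor(fake_changes[-n], fake_changes[-1])

# Same idea using non-overlapping pairs (changes 1-2, 3-4, ...)
odd_years  <- fake_changes[seq(1, n - 1, by = 2)]
even_years <- fake_changes[seq(2, n, by = 2)]
lag1_pairs <- cor(odd_years, even_years)

# acf() reports the same quantity at lag 1 (element 1 is lag 0, element 2 is lag 1)
acf_vals <- acf(fake_changes, plot = FALSE)$acf

c(lag1_all, lag1_pairs, acf_vals[2])
```

The three numbers will not match exactly, since the non-overlapping version uses half the pairs and `acf()` normalizes slightly differently, but they should tell the same story.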

Before I explain how to incorporate this information into a revised Monte Carlo simulation, what does a negative correlation mean in this context? It tells us that if the earth’s temperature rises by more than average in one year, it’s likely to fall (or rise less than average) the following year, and vice versa. The bigger the jump one way, the larger the jump the other way next year (note this is not a case of regression to the mean; these are changes in temperature, not absolute temperatures. Update: This interpretation depends on your assumptions. Specifically, if you begin by assuming a trend exists, you could see this as regression to the mean. Note, however, that if you start with noise, then draw a moving average, this will induce regression to the mean along your “trendline”). If anything, this is evidence that the earth has some kind of built in balancing mechanism for global temperature changes, but as a non-climatologist all I can say is that the data are compatible with such a mechanism; I have no idea if this makes sense physically.

Correcting for correlation

What effect will factoring in this negative correlation have on our simulation? My initial guess is that it will cause the total temperature change after 130 years to be much smaller than under the pure random walk model, since changes one year are likely to be balanced out by changes next year in the opposite direction. This would, in turn, suggest that the observed 0.76°C change over the past 130 years is much less likely to happen without a trend.

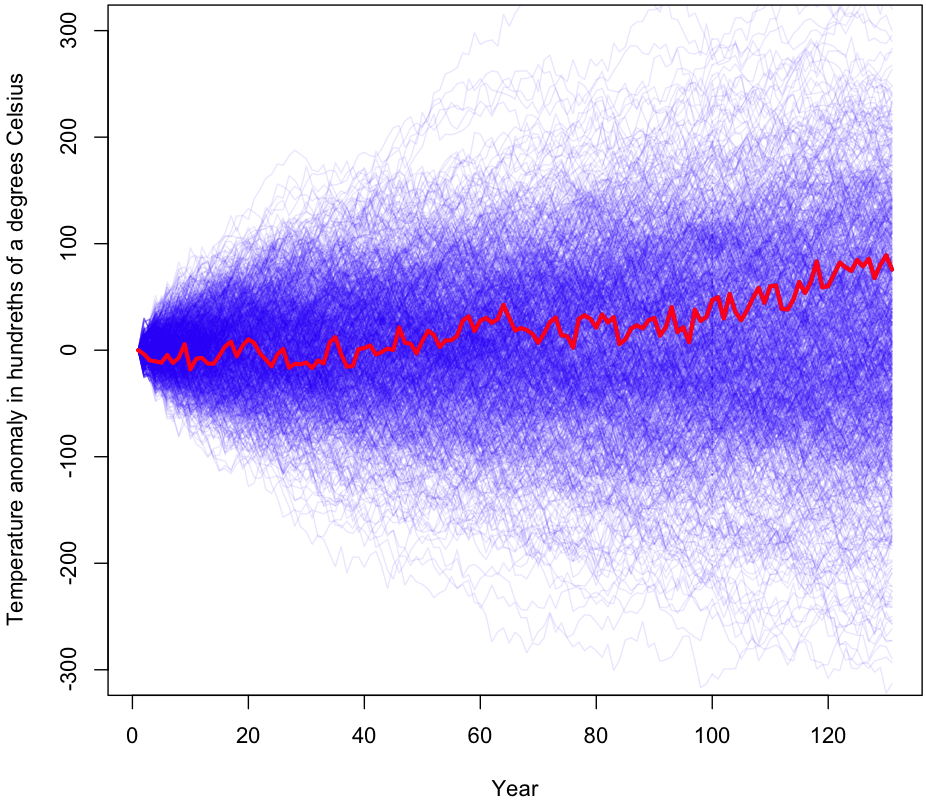

The most straightforward way to incorporate this correlation into our simulation is to sample YoY changes in 2-year increments. Instead of 130 individual changes, we take 65 changes from our set of centered changes, then for each sample we look at that year’s changes and the year that immediately follows it. Here’s what the plot looks like for 1000 trials.
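The block-sampling idea can be sketched as a small general-purpose function. This is an illustration under stated assumptions rather than the exact code used for this post: `sample_blocks` is a hypothetical helper, the data are synthetic, and when the block length does not divide the total evenly it simply truncates the last block.

```r
# Build one simulated history by sampling blocks of consecutive changes.
# `changes`: numeric vector of centered YoY changes; `block`: years per block.
sample_blocks <- function(changes, block = 2, total = length(changes)) {
  n_starts <- length(changes) - block + 1          # valid starting positions for a block
  n_blocks <- ceiling(total / block)               # enough blocks to cover `total` years
  starts   <- sample(n_starts, n_blocks, replace = TRUE)
  # Expand each start into its run of consecutive indices, keeping block order
  idx <- as.vector(sapply(starts, function(s) s:(s + block - 1)))
  changes[idx[seq_len(total)]]
}

set.seed(7)
fake_changes <- rnorm(130, sd = 11)                # synthetic stand-in data
fake_changes <- fake_changes - mean(fake_changes)  # center at zero
path <- sample_blocks(fake_changes, block = 2)
length(path)
```

Keeping each pair of consecutive years together is what preserves the year-to-year correlation inside a block; correlation across block boundaries is still lost.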

After doing 100,000 trials with 2-year increments, we get a p-value of 0.48. Not much change, and still far from being significant. Sampling 3 years at a time brings our p-value down to 0.39. Note that as we grab longer and longer consecutive chains at once, the p-value has to approach 0 (asymptotically) because we are more and more likely to end up with the original 130 year sequence of (centered) changes, or a sequence which is very similar. For example, increasing our chain from one YoY change to three reduces the number of samplings from 130^130 to approximately 43^43 – still a huge number, but many orders of magnitude less (Fun problem: calculate exactly how many fewer orders of magnitude. Hint: If it takes you more than a few minutes, you’re doing it wrong).
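For readers who want to check their answer to the fun problem, one way to do the arithmetic is with logarithms, so the huge numbers never have to be written out. The variable names are illustrative.

```r
# Orders of magnitude (base 10) of each count of possible samplings
log10_single <- 130 * log10(130)   # log10 of 130^130
log10_triple <- 43 * log10(43)     # log10 of 43^43

# How many fewer orders of magnitude there are when sampling 3 years at a time
magnitude_gap <- log10_single - log10_triple
magnitude_gap
```

The gap comes out a little over 204 orders of magnitude.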

Correcting for correlation Part 2 (A better way?)

To be more certain of the results, I ran the simulation in a second way. First I sampled 130 of the changes at random, then I threw out any samplings where the correlation coefficient was greater than -0.32. This left me with the subset of random samplings whose coefficients were less than -0.32. I then tested these samplings to see the fraction that gave results as extreme as our original data.

Compared to the chained approach above, I consider this to be a more “honest” way to sample an empirical distribution, given the constraint of a (maximum) correlation threshold. I base this on E.T. Jaynes’ demonstration that, in the face of ignorance as to how a particular statistic was generated, the best approach is to maximize the (informational) entropy. The resulting solution is the most likely result you would get if you sampled from the full space (uniformly), then limited your results to those which match your criteria. Intuitively, this approach says: Of all the ways to arrive at a correlation of -0.32 or less, which are the most likely to occur?
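The sample-then-filter idea can be sketched as a simple rejection sampler. This is a hedged illustration on synthetic data: `sample_with_max_cor` is a hypothetical helper, and the demo uses a looser threshold of -0.2 (instead of -0.32) purely so that it runs quickly.

```r
# Rejection sampler: draw with replacement until the lag-1 correlation
# of the sampled sequence is at or below `max_cor`.
sample_with_max_cor <- function(changes, max_cor, max_tries = 1e5) {
  n <- length(changes)
  for (attempt in seq_len(max_tries)) {
    jumps <- sample(changes, n, replace = TRUE)
    if (cor(jumps[-n], jumps[-1]) <= max_cor) return(jumps)
  }
  stop("no sample met the correlation constraint within max_tries")
}

set.seed(3)
fake_changes <- rnorm(130, sd = 11)                 # synthetic stand-in data
fake_changes <- fake_changes - mean(fake_changes)   # center at zero

accepted <- sample_with_max_cor(fake_changes, max_cor = -0.2)
cor(accepted[-130], accepted[-1])
```

Most draws are thrown away, and the stricter the threshold the more are wasted, which is why this approach is so much slower than plain resampling.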

For a more thorough discussion of maximum entropy approaches, see Chapter 11 of Jaynes’ book “Probability Theory” or his “Papers on Probability” (1979). Note that this is complicated, mind-blowing stuff (it was for me, anyway). I strongly recommend taking the time to understand it, but don’t bother unless you have at least an intermediate-level understanding of math and probability.

Here’s what the plot looks like subject to the correlation constraint:

If it looks similar to the other plots in terms of results, that’s because it is. Empirical p-value from 1000 trials? 0.55. Because generating samples with the required correlation coefficients took so long, these were the only trials I performed. However, the results after 1000 trials are very similar to those for 100,000 or a million trials, and with a p-value this high there’s no realistic chance of getting a statistically significant result with more trials (though feel free to try for yourself using the R code and your cluster of computers running Hadoop). In sum, the maximum entropy approach, just like the naive random walk simulation and the consecutive-year simulations, gives us no reason to doubt our default explanation of GW2 – that it is the result of random, undirected changes over time.

One more assumption to test

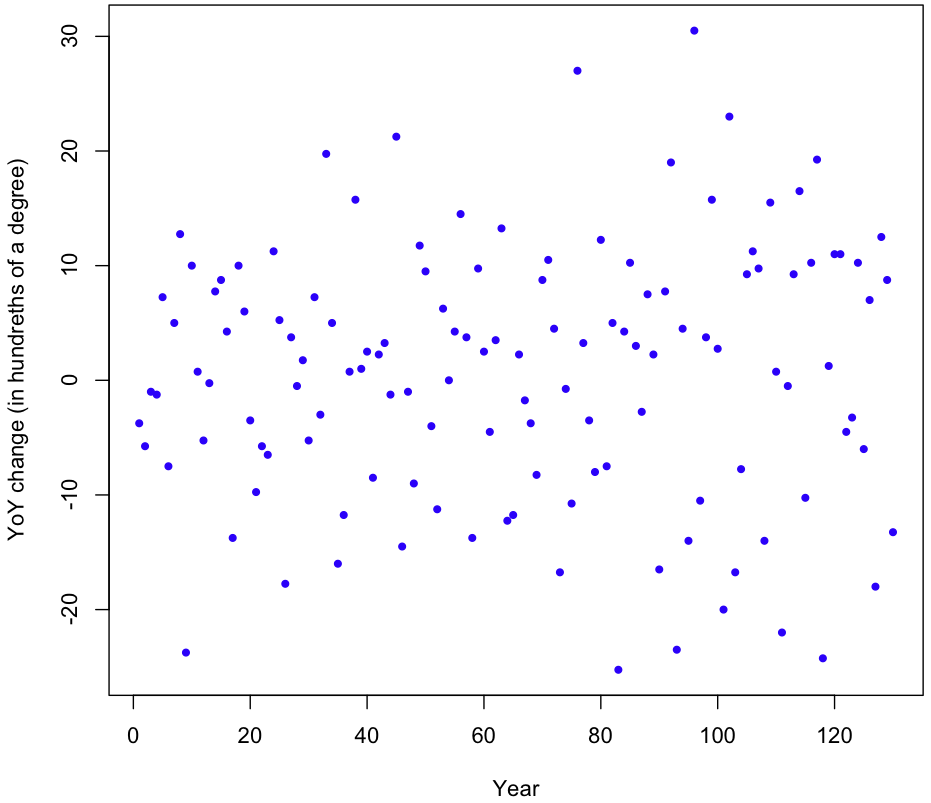

Another assumption in our model is that YoY changes have constant variance over time (homoscedasticity). Here’s the plot of the (raw, uncentered) YoY changes:

It appears that the variance might be increasing over time, but just looking at the plot isn’t conclusive. To be sure, I took the absolute value of the changes and ran a simple regression on them. The result? Variance is increasing (p-value 0.00267), though at a rate that’s barely perceptible; the estimated absolute increase in magnitude of the YoY changes is 0.046. That figure is in hundredths of degrees Celsius, so our linear model gives a rate of increase in variability of just 4.6 ten-thousandths of a degree per year. Over the course of 130 years, that equates to an increase of six hundredths of a degree Celsius (margin of error of 3.9 hundredths at two std deviations). This strikes me as a minuscule amount, though relative to the size of the YoY changes themselves it’s non-trivial.
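A rough sketch of this check, using synthetic changes whose spread grows slowly by construction (not the NASA data; all variable names are illustrative). The last two lines simply redo the arithmetic quoted above.

```r
# Synthetic YoY changes whose standard deviation creeps upward over time
set.seed(11)
years        <- 1:130
fake_changes <- rnorm(130, mean = 0, sd = 8 + 0.05 * years)

# Regress the magnitude of each change on time; a positive slope suggests rising volatility
fit   <- lm(abs(fake_changes) ~ years)
slope <- unname(coef(fit)["years"])
slope

# The arithmetic from the text: 0.046 hundredths of a degree per year, over 130 years
total_increase <- 0.046 * 130   # in hundredths of a degree
total_increase
```

Regressing absolute changes on time is a quick, informal check; it is not a formal heteroscedasticity test.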

Does this increase in volatility invalidate our simulation? I don’t think so. Any model which took into account this increase in volatility (while still being centered) would be more likely to produce extreme results under the null hypothesis of undirected change. In other words, the bigger the yearly temperature changes, the more likely a random sampling of those changes will lead us far away from our 13.8°C starting point in 1881, with most of the variation coming towards the end. If we look at the data, this is exactly what happens. During the first 63 years of data the temperature increases by 42 hundredths of a degree, then drops 40 hundredths in just 12 years, then rises 80 hundredths within 25 years of that; the temperature roller coaster is becoming more extreme over time, as variability increases.

Beyond falsifiability

Philosopher Karl Popper insisted that for a theory to be scientific, it must be falsifiable. That is, there must exist the possibility of evidence to refute the theory, if the theory is incorrect. But falsifiability, by itself, is too low a bar for a theory to gain acceptance. Popper argued that there were gradations and that “the amount of empirical information conveyed by a theory, or its empirical content, increases with its degree of falsifiability” (emphasis in original).

Put in my words, the easier it is to disprove a theory, the more valuable the theory. (Incorrect) theories are easy to disprove if they give narrow prediction bands, are testable in a reasonable amount of time using current technology and measurement tools, and if they predict something novel or unexpected (given our existing theories).

Perhaps you have already begun to evaluate the GW claims in terms of these criteria. I won’t do a full assay of how the GW theories measure up, but I will note that we’ve had several long periods (10 years or more) with no increase in global temperatures, so any theory of GW3 or GW5 will have to be broad enough to encompass decades of non-warming, which in turn makes the theory much harder to disprove. We are in one of those sideways periods right now. That may be ending, but if it doesn’t, how many more years of non-warming would we need for scientists to abandon the theory?

I should point out that a poor or a weak theory isn’t the same as an incorrect theory. It’s conceivable that the earth is in a long-term warming trend (GW2) and that this warming has a man-made component (GW5), but that this will be a slow process with plenty of backsliding, visible only over hundreds or thousands of years. The problem we face is that GW3 and beyond are extreme claims, often made to bolster support for extreme changes in how we live. Does it make sense to base extreme claims on difficult to falsify theories backed up by evidence as weak as the global temperature data?

Invoking Pascal’s Wager

Many of the arguments in favor of radical changes to how we live go like this: Even if the case for extreme man-made temperature change is weak, the consequences could be catastrophic. Therefore, it’s worth spending a huge amount of money to head off a potential disaster. In this form, the argument reminds me of Pascal’s Wager, named after Blaise Pascal, a 17th century mathematician and co-founder of modern probability theory. Pascal argued that you should “wager” in favor of the existence of God and live life accordingly: If you are right, the outcome is infinitely good, whereas if you are wrong and there is no God, the most you will have lost is a lifetime of pleasure.

Before writing this post, I Googled to see if others had made this same connection. I found many discussions of the similarities, including this excellent article by Jim Manzi at The American Scene. Manzi points out problems with applying Pascal’s Wager, including the difficulty in defining a stopping point for spending resources to prevent the event. If a 20°C increase in temperature is possible, and given that such an increase would be devastating to billions of people, then we should be willing to spend a nearly unlimited amount to avert even a tiny chance of such an increase. The math works like this: Amount we should be willing to spend = probability of 20°C increase (say 0.00001) * harm such an increase would do (a godzilla dollars). The end result is bigger than the GDP of the planet.

Of course, catastrophic GW isn’t the only potential threat that can have Pascal’s Wager applied to it. We also face annihilation from asteroids, nuclear war, and new diseases. Which of these holds the trump card to claim all of our resources? Obviously we need some other approach besides throwing all our money at the problem with the scariest Black Swan potential.

There’s another problem with using Pascal’s Wager style arguments, one I rarely see discussed: proponents fail to consider the possibility that, in radically altering how we live, we might invite some other Black Swan to the table. In his original argument, Pascal the Jansenist (sub-sect of Christianity) doesn’t take into account the possibility that God is a Muslim and would be more upset by Pascal’s professed Christianity than He would be with someone who led a secular lifestyle. Note that these two probabilities – that God is a Muslim who hates Christians more than atheists, or that God is Christian and hates atheists – are incommensurable! There’s no rational way to weigh them and pick the safer bet.

What possible Black Swans do we invite by forcing people to live at the same per-capita energy-consumption level as our forefathers in the time of James A. Garfield?

Before moving on, I should make clear that humans should, in general, be very wary of inviting Black Swans to visit. This goes for all experimentation we do at the sub-atomic level, including work done at the LHC (sorry!), and for our attempts to contact aliens (as Stephen Hawking has pointed out, there’s no certainty that the creatures we attract will have our best interests in mind). So, unless we can point to strong, clear, tangible benefits from these activities, they should be stopped immediately.

Beware the anthropic principle

Strictly speaking, the anthropic principle states that no matter how low the odds are that any given planet will house complex organisms, one can’t conclude that the existence of life on our planet is a miracle. Essentially, if we didn’t exist, we wouldn’t be around to “notice” the lack of life. The chance that we should happen to live on a planet with complex organisms is 1, because it has to be.

More broadly, the anthropic principle is related to our tendency to notice extreme results, then assume these extremes must indicate something more than the noise inherent in random variation. For example, if we gathered together 1000 monkeys to predict coin tosses, it’s likely that one of them will predict the first 10 flips correctly. Is this one a genius, a psychic, an uber-monkey? No. We just noticed that one monkey because its record stood out.
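The monkey example is easy to check with a few lines of arithmetic, assuming a fair coin and monkeys that guess independently (both assumptions are mine; the variable names are illustrative).

```r
monkeys <- 1000
flips   <- 10

p_single         <- 0.5^flips                    # one monkey calls all 10 flips correctly
p_at_least_one   <- 1 - (1 - p_single)^monkeys   # at least one of the 1000 does
expected_perfect <- monkeys * p_single           # expected number of perfect records

c(p_at_least_one, expected_perfect)
```

Under these assumptions the chance that at least one monkey has a perfect record is about 62%, so "likely" is a fair description.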

Here’s another, potentially lucrative, most likely illegal, definitely immoral use of the anthropic principle. Send out a million email messages. In half of them, predict that a particular stock will go up the next day, in the other half predict it will go down. The next day, send another round of predictions to just those emails that got the correct prediction the first time. Continue sending predictions to only those recipients who receive the correct guesses. After a dozen days, you’ll have a list of people who’ve seen you make 12 straight correct predictions. Tell these people to buy a stock you want to pump and dump. Chances are good they’ll bite, since from their perspective you look like a stock-picking genius.
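To put a number on the size of that final list, here is the halving arithmetic, assuming exactly half of the remaining recipients get a correct prediction each day (variable names are illustrative).

```r
recipients <- 1e6
days       <- 0:12

# Recipients who have seen only correct predictions after each day
remaining <- recipients / 2^days

remaining[13]   # after 12 days of predictions
```

A million addresses shrink to roughly 244 people who have seen twelve straight correct calls.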

What does this have to do with GW? It means that we have to disentangle our natural tendency to latch on to apparent patterns from the possibility that this particular pattern is real, and not just an artifact of our bias towards noticing unlikely events under null hypotheses.

Biases, ignorance, and the brief life, death, and afterlife of a pet theory

While the increase in volatility seen in the temperature data complicates our analysis of the data, it gives me hope for a pet theory about climate change which I’d buried last year (where does one bury a pet theory?). The theory (for which I share credit with my wife and several glasses of wine) is that the true change in our climate should best be described as Distributed Season Shifting, or DSS. In short, DSS states that we are now more likely to have unseasonably warm days during the colder months, and unseasonably cold days during the warmer months. Our seasons are shifting, but in a chaotic, distributed way. We built this theory after noticing a “weirdening” of our weather here in Toronto. Unfortunately (for the theory), no matter how badly I tortured the local temperature data, I couldn’t get it to confess to DSS.

However, maybe I was looking at too small a sample of data. The observed increase in volatility of global YoY changes might also be reflected in higher volatility within the year, but the effects may be so small that no single town’s data is enough to overcome the high level of “normal” volatility within seasonal weather patterns.

My tendency to look for confirmation of DSS in weather data is a bias. Do I have any other biases when it comes to GW? If anything, as the owner of a recreational property located north of our northern city, I have a vested interest in a warmer earth. Both personally (hotter weather = more swimming) and financially, GW2 and 3 would be beneficial. In a Machiavellian sense, this might give me an incentive to downplay GW2 and beyond, with the hope that our failure to act now will make GW3 inevitable. On the other hand, I also have an incentive to increase the perception of GW2, since I will someday be selling my place to a buyer who will base her bid on how many months of summer fun she expects to have in years to come.

Whatever impact my property ownership and failed theory have on this data analysis, I am blissfully free of one biasing factor shared by all working climatologists: the pressures to conform to peer consensus. Don’t underestimate the power of this force! It affects everything from what gets published to who gets tenure. While in the long run scientific evidence wins out, the short run isn’t always so short: For several decades the medical establishment pushed the health benefits of a low fat, high carb diet. Alternative views are only now getting attention, despite hundreds of millions of dollars spent on research which failed to back up the consensus claims.

Is the overall evidence for GW2 – 9 as weak as the evidence used to promote high carb diets? I have no idea. Beyond the global data I’m examining here, and my failed attempt to “discover” DSS in Toronto’s temperature data, I’m coming from a position of nearly complete ignorance: I haven’t read the journal articles, I don’t understand the chemistry, and I’ve never seen Al Gore’s movie.

Final analysis and caveats

Chances are, if you already had strong opinions about the nine faces of GW before reading this article, you won’t have changed your opinion much. In particular, if a deep understanding of the science has convinced you that GW is a long term, man-made trend, you can point out that I haven’t disproven your view. You could also argue the limitations of testing the data using the data, though I find this more defensible than testing the data with a model created to fit the data.

Regardless of your prior thinking, I hope you recognize that my analysis shows that YoY temperature data, by itself, provides no evidence for GW2 and beyond. Also, because of the relatively long periods of non-warming within the context of an overall rise in global temperature, any correct theory of GW must include backsliding within its confidence intervals for predictions, making it a weaker theory.

What did my analysis show for sure? Clearly, temperatures have risen since the 1880s. Also, volatility in temperature changes has increased. That, of itself, has huge implications for our lives, and tempts me to do more research on DSS (what do you call a pet theory that’s risen from the dead?). I’ve also become intrigued with the idea that our climate (at large) has mechanisms to balance out changes in temperature. In terms of GW2 itself, my analysis has not convinced me that it’s all a myth. If we label random variation “noise” and call trend a “signal,” I’ve shown that yearly temperature changes are compatible with an explanation of pure noise. I haven’t shown that no signal exists.

Thanks for reading all the way through! Here’s the code:

Code in R

theData = read.table("/path/to/theData/FromNASA/cleanedForR.txt", header=T)

# There has to be a more elegant way to do this

theData$means = rowMeans(aggregate(theData[,c("DJF","MAM","JJA","SON")], by=list(theData$Year), FUN="mean")[,2:5])

# Get a single vector of Year over Year changes

rawChanges = diff(theData$means, 1)

# SD on yearly changes

sd(rawChanges)

# Subtract off the mean, so that the distribution now has an expectation of zero

changes = rawChanges - mean(rawChanges)

# Find the total range, 1881 to 2011

(theData$means[131] - theData$means[1])/100

# Year 1 average, year 131 average, difference between them in hundredths

y1a = theData$means[1]/100 + 14

y131a = theData$means[131]/100 + 14

netChange = (y131a - y1a)*100

# First simulation, with plotting

plot.ts(cumsum(c(0,rawChanges)), col="red", ylim=c(-300,300), lwd=3, xlab="Year", ylab="Temperature anomaly in hundredths of a degree Celsius")

trials = 1000

finalResults = rep(0,trials)

for(i in 1:trials) {

  jumps = sample(changes, 130, replace=T)

  # Add lines to plot for this, note the "alpha" term for transparency

  lines(cumsum(c(0,jumps)), col=rgb(0, 0, 1, alpha = .1))

  finalResults[i] = sum(jumps)

}

# Re-plot red line again on top, so it's visible again

lines(cumsum(c(0,rawChanges)), col="red", ylim=c(-300,300), lwd=3)

# Find the fraction of trials that were more extreme than the original data

( length(finalResults[finalResults>netChange]) + length(finalResults[finalResults<(-netChange)]) ) / trials

# Many more simulations, minus plotting

trials = 10^6

finalResults = rep(0,trials)

for(i in 1:trials) {

  jumps = sample(changes, 130, replace=T)

  finalResults[i] = sum(jumps)

}

# Find the fraction of trials that were more extreme than the original data

( length(finalResults[finalResults>netChange]) + length(finalResults[finalResults<(-netChange)]) ) / trials

# Looking at the correlation between YoY changes

x = changes[seq(1,129,2)]

y = changes[seq(2,130,2)]

plot(x,y,col="blue", pch=20, xlab="YoY change in year i (hundredths of a degree)", ylab="YoY change in year i+1 (hundredths of a degree)")

summary(lm(x~y))

cor(x,y)

acf(changes)

# Try sampling in 2-year increments

plot.ts(cumsum(c(0,rawChanges)), col="red", ylim=c(-300,300), lwd=3, xlab="Year", ylab="Temperature anomaly in hundredths of a degree Celsius")

trials = 1000

finalResults = rep(0,trials)

for(i in 1:trials) {

  indexes = sample(1:129,65,replace=T)

  # Interlace consecutive years, to maintain the order of the jumps

  jumps = as.vector(rbind(changes[indexes],changes[(indexes+1)]))

  lines(cumsum(c(0,jumps)), col=rgb(0, 0, 1, alpha = .1))

  finalResults[i] = sum(jumps)

}

# Re-plot red line again on top, so it's visible again

lines(cumsum(c(0,rawChanges)), col="red", ylim=c(-300,300), lwd=3)

# Find the fraction of trials that were more extreme than the original data

( length(finalResults[finalResults>netChange]) + length(finalResults[finalResults<(-netChange)]) ) / trials

# Try sampling in 3-year increments

trials = 100000

finalResults = rep(0,trials)

for(i in 1:trials) {

  indexes = sample(1:128,43,replace=T)

  # Interlace consecutive years, to maintain the order of the jumps

  jumps = as.vector(rbind(changes[indexes],changes[(indexes+1)],changes[(indexes+2)]))

  # Grab one final YoY change to fill out the 130

  jumps = c(jumps, sample(changes, 1))

  finalResults[i] = sum(jumps)

}

# Find the fraction of trials that were more extreme than the original data

( length(finalResults[finalResults>netChange]) + length(finalResults[finalResults<(-netChange)]) ) / trials

# The maxEnt method for conditional sampling

# Start a fresh plot for this simulation

plot.ts(cumsum(c(0,rawChanges)), col="red", ylim=c(-300,300), lwd=3, xlab="Year", ylab="Temperature anomaly in hundredths of a degree Celsius")

trials = 1000

finalResults = rep(0,trials)

for(i in 1:trials) {

  theCor = 0

  while(theCor > -.32) {

    jumps = sample(changes, 130, replace=T)

    theCor = cor(jumps[1:129],jumps[2:130])

  }

  # Add lines to plot for this (start the path at 0, as in the other simulations)

  lines(cumsum(c(0,jumps)), col=rgb(0, 0, 1, alpha = .1))

  finalResults[i] = sum(jumps)

}

# Re-plot red line again on top, so it's visible again

lines(cumsum(c(0,rawChanges)), col="red", ylim=c(-300,300), lwd=3)

( length(finalResults[finalResults>netChange]) + length(finalResults[finalResults<(-netChange)]) ) / trials

# Plot of YoY changes over time

plot(rawChanges,pch=20,col="blue", xlab="Year", ylab="YoY change (in hundredths of a degree)")

# Is there a trend?

absRawChanges = abs(rawChanges)

pts = 1:130

summary(lm(absRawChanges~pts))

Tags: global warming, NASA, pascal's wager

Very good piece. Do you see the variance/time it is lower at end.

Hi,

Thanks for making the code available. Could you maybe expand on the idea that the correlation between YoY is not regression to the mean?

In random data, you see a negative correlation for the YoY change.

x <- rnorm(1000)

dx <- diff(x)

plot(dx[-length(dx)], dx[-1])

cor.test(dx[-length(dx)], dx[-1])

Thanks,

G

Sure Gio. For those who don’t know what we’re talking about, “regression to the mean” is the tendency for extreme deviations from the model to be followed by less extreme deviations. To return to the example of the monkeys flipping coins: The better a monkey does during its first 10 predictions, the more likely it is to do worse (closer to the mean of 0.5) on its next 10. Likewise, a very unlucky monkey will probably “improve” its prediction powers on the next 10 guesses as it “regresses to the mean” of 0.5. Shameless plug: I slipped in a reference to regression to the mean in my comic (that page isn’t shown in the samples).

This (my analysis) is not a case of regression to the mean because the mean changes each time to be the previous year’s temperature, and we are examining all the monkeys. For example, if you create the following random walk:

x = rnorm(1000)

y = cumsum(x)

plot.ts(y, col="blue")

Then your YoY changes are the (IID) x’s, and hence uncorrelated. Try:

cor(x[1:999],x[2:1000])

or

cor(x[seq(1,999,2)],x[seq(2,1000,2)])

and you should get a very low observed correlation coefficient.

EDIT: I should clarify that if we JUST looked at the most extreme individual YoY changes, then these would be likely to be followed by a less extreme change. However, we’re looking at the full empirical distribution of YoY changes.
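A minimal sketch contrasting the two cases discussed above, assuming simulated standard-normal data (the seed and variable names are illustrative and not part of the original analysis). When IID noise is treated as the level and then differenced, neighbouring changes share one term with opposite sign, so their theoretical lag-1 correlation is -0.5. When the level is a random walk built from IID steps, the changes are the steps themselves and should be close to uncorrelated.

```r
set.seed(1)  # illustrative seed, chosen arbitrarily
n <- 10000

# Case 1: IID noise treated as the levels, then differenced
noise <- rnorm(n)
dNoise <- diff(noise)
corNoise <- cor(dNoise[-length(dNoise)], dNoise[-1])

# Case 2: a random walk whose steps are IID; its changes are the steps
steps <- rnorm(n)
walk <- cumsum(steps)
dWalk <- diff(walk)
corWalk <- cor(dWalk[-length(dWalk)], dWalk[-1])

print(c(differencedNoise = corNoise, randomWalkChanges = corWalk))
```

Which case is closer to the real temperature series is exactly what the thread is arguing about; the sketch only shows that the two data-generating assumptions leave different fingerprints in the YoY changes.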

@J. Li:

Do you mean that variance begins to go back down in the final years? I’ll look into that.

Great post.

Thanks! I have been trying to explain some of this to my son and this post is awesome! Richard Feynman had some great discussions on the concept that we lend too much credence to a theory and then alter the results to match – basically throwing away any data that does not match our theory as an outlier and thereby decreasing knowledge because we skew the model. It has been difficult to get past the political and financial stress on this topic so I appreciate your appeal to statistics.

Thom … I appreciate your reference to Richard Feynman!

Here’s what you’ve done:

1) Construct a straw man argument for anthropogenic global warming.

2) Admit that you know essentially nothing about climate science or about the arguments that actual people have put forth for AGW.

3) Discover that your straw man argument fails (surprise!)

4) Slap a provocative title on your post and call it a day.

If you were a journalist or an academic this would be negligent. As a blogger, I guess it’s a good strategy because it got you some page views.

Hi Mike,

What “straw man” are you referring to? Unless you consider the global temperature data itself to be orthogonal to the theory of anthropogenic GW, analyzing its strength is relevant.

I believe the “straw man” here is that global temperatures should not follow a random walk in the event of GW2. The problem with this standard is that the random walk is not a valid model of the earth’s climate. Here’s a post featuring a debate between statisticians and physical scientists on exactly this topic. I’m certain that by even superficially consulting an expert you could find further work in this area, but if you fear for your own ability to stand up in the face of “peer consensus” I advise against reading any topical work on the matter.

On the question of peer consensus, the tools of science (which you happily disregard) are specifically designed to combat this type of groupthink. While you’re right there are cases where it has failed, the successes attributable to the scientific method are too numerous to name.

Failed to attach link mentioned above.

http://ourchangingclimate.wordpress.com/2010/03/08/is-the-increase-in-global-average-temperature-just-a-random-walk/

Hi Isaac,

Thanks for the link. I put aside my fears and read some of Bart Verheggen’s posts (the person you linked). When he says things like: “Temperatures jiggle up and down, but the overall trend is up: The globe is warming” based on running averages and, even worse, crap like this

http://ourchangingclimate.files.wordpress.com/2010/03/global_temp_yearly_p15_trendline_tavg_incl_txt_eng.png?w=675&h=441

what he’s really saying is, “See, the chart’s going up!” Those assertions are what got me interested in this subject to begin with, to see if the data provide strong evidence for something other than “jiggling up and down.”

Not sure if you’ve heard of “technical analysis” in the financial markets. Proponents draw all kinds of trend lines over stock prices to show it’s going up or down. There’s a wonderful part in the book “A Random Walk Down Wall Street” where he shows a chart of coin flips to a technical analyst who goes nuts and says “We’ve got to buy immediately. This pattern’s a classic. There’s no question the stock will be up 15 points next week.”

Again, this isn’t to say GW2 and up are fabrications, it’s to say that we need to be cautious about our human biases, and careful in assessing the strength of our theories.

You are missing the point

http://julesandjames.blogspot.se/2012/11/polynomial-cointegration-tests-of.html

http://rabett.blogspot.jp/2010/03/idiots-delight.html

http://tamino.wordpress.com/2010/03/11/not-a-random-walk/

Now why would you try to explain away physics like this without reading up on it?

I think Mike has something here…

Get a refereed paper on this, and you will be number 25.

Good luck.

http://www.treehugger.com/climate-change/pie-chart-13950-peer-reviewed-scientific-articles-earths-climate-finds-24-rejecting-global-warming.html

I would have titled this a surprisingly weak analysis of global temperature trends.

Have a look at http://berkeleyearth.org/

Nice post. I’d like to see how well the assumption that the distribution of temperature changes is stationary holds up. Here’s one simple test: break the data into two time periods, e.g. pre-1950 and post. Then, sample only the shuffled temperature changes during the pre-1950 period and see how typical our run up was. Then repeat in the second period.

Another good thing to try would be a kernel density estimator for a piecewise linear model.

Basically, what it looks like to me is that assuming all different year to year changes were fair game to hypothetically be realized at any point back to 1881 is invalid. There will be many paths that randomly get modern-grade large YoY changes more often, artificially inflating the probability mass living above the red curve in your plots.

Your rough attempts to correct for the correlations go a small way toward studying this. But if there was some kind of feedback system making a totally different regime in the last 20 years, with a far different YoY distribution at that end, then just modeling first or second order correlation would not be enough to beat down that effect.

I appreciate the analogy to “technical analysis” from finance in your comment too. I agree that a lot of mainstream climate research is just marketing and professing/cheering. Don’t fall victim to any biases on this though. Read, e.g. Imbens on kernel density estimators and see if any more complex models that don’t assume so much stationarity yield good fits.
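The split-period check suggested in this comment could be sketched as follows. This is one reading of the suggestion, not the author's code: the data are simulated stand-ins, and `periodPValue`, `splitAt` and the seed are hypothetical names and values introduced for illustration. Each period is tested only against resampled, centered changes drawn from that same period.

```r
set.seed(42)  # illustrative seed

# Stand-in data: 130 simulated YoY changes (hundredths of a degree).
# Swap in the real rawChanges vector to run the check on the NASA series.
simChanges <- round(rnorm(130, mean = 0.5, sd = 10))

# Fraction of resampled no-trend paths whose net move is at least as
# extreme (in absolute value) as the observed net move for that period.
periodPValue <- function(changes, trials = 2000) {
  observed <- sum(changes)
  centered <- changes - mean(changes)
  sims <- replicate(trials, sum(sample(centered, length(centered), replace = TRUE)))
  mean(abs(sims) >= abs(observed))
}

splitAt <- 69  # hypothetical index of the last change before roughly 1950
early <- simChanges[1:splitAt]
late  <- simChanges[(splitAt + 1):length(simChanges)]

results <- c(early = periodPValue(early), late = periodPValue(late))
print(results)
```

If the two periods really have different YoY distributions, the two values can differ noticeably from each other and from the full-sample result, which is the non-stationarity concern raised above.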

I’m a little confused here, why do we expect global climate to follow a random walk process? The strong first-order autocorrelation you’re seeing there is evidence for one of two things:

1. Last year’s climate is affecting the current year’s climate.

2. There’s a secular trend in the data!

Modelling the data as a random walk is appropriate if we believe option 1 is true. But the basics of climate science would suggest otherwise; predictable forcing (El Nino, solar cycle, GHG effect) layered on top of base geographical/biological climate patterns are the main drivers of climatic trends. While these effects are autocorrelated by nature, climate data itself is not fundamentally so.

I like your analysis (thank you for the code!), but it can’t distinguish between a real trend in stationary data and a random-walk process. If the strength of the external trend increases, the autocorrelation increases and the random-walk simulation produces more extreme results.

This is a great example of how misapplication of statistical techniques can lead to nonsense and meaningless results.

Under the null-hypothesis presented here, extreme shifts in global temperature are commonplace, with 44 percent of 130 year periods under the model experiencing a temperature shift of magnitude greater than 1 degree. To put this in perspective, the 600 year shift from the “medieval warm period” to the “little ice age” was about this magnitude. There were no 130 year periods that experienced anywhere near this kind of shift.

There is little more going on here than garbage-in, garbage-out. Please refrain from posting this junk on R-bloggers.

Thanks for this. Very interesting. The big assumption you didn’t address is the arbitrary choice of start date. What if you repeated the analysis using just the last 30 or 50 years? Perhaps that’s when CO2 emissions really accelerated.

The starting date was arbitrary, but it wasn’t my choice. 1881 is the first full year for the NASA data.

Just a quick quote from here

“Fundamentally, the argument that a time series passes various statistical tests indicating consistency with a random walk, tells us nothing about whether it actually was generated by a random process. Especially when we happen to have very good reasons to believe that it was not… “,

but that is noted, in principle, in your post.

First the math part: I have an honest question as someone who has not used Monte Carlo methods very much. Since you believe we have (negative) autocorrelation in the sequence of increments (and we better, otherwise the scaling limit is Brownian motion which has undesirable asymptotic behavior, to put it lightly), why do you still believe the empirical cdf? Without independent sampling, the ecdf might be a bad estimate of the “true distribution” of increments, and unless I’m mistaken the Monte Carlo methods you’re using all rely on treating the ecdf as an estimate of the distribution of increments.

Second, some science: briefly, you have done no science here- nothing, zip, nada. You found a (somewhat interesting) probabilistic model and concluded that this data is consistent with your model. Of course, there are many models for which the data are consistent. To apply Occam’s razor honestly you would have to posit that there exists something in nature called randomness and that thing is generating our global average temperatures, and that’s even sillier than it sounds.

This type of argument is wonderful for refuting technical analysts (especially when you set them up by showing them an actual random walk) precisely because what they do is not science either. They aren’t trying to understand anything, only predict it. Science actually tries to understand things and measures its success at doing so by its ability to predict.

Any conversation about global warming has to start with physics, not random walks. Your probabilistic model has to be constrained by known physical laws otherwise it’s not an honest model.

Third, on groupthink / peer-pressure / consensus: why do people always assume the scientific community is more likely to welcome research that confirms what it already believes? Is there any evidence for this sociological “fact”? I think scientists are actually quite excited when unexpected things happen. If someone can actually demonstrate, conclusively, that an accepted scientific theory is false, I think the community reveres that person. All great discoveries are falsifications/modifications of the previously most accepted theory. If someone conclusively refuted anthropogenic climate change they would be given a Nobel Prize, and most powerful governments and corporations in the world would be indebted to them…

Hi Matt,

As someone who is (at the moment) as agnostic as you are on this issue I appreciate a statistics-driven approach to this problem.

I was wondering if you had a chance to look at the BEST dataset (http://berkeleyearth.org/dataset/) yet, and if you’d like to comment on their analysis/conclusion?

You are confusing things which have a central limit with things which are purely a function of n(n-1) i.e. your random walk.

Over the past few days my weight has varied up and down half a pound or so in the mornings. However, given this variance, and without some drastic change in my behaviour, it would be highly unlikely that my weight would go on a random walk up or down a stone or two.

There is a homeostatic mechanism that tends to keep my weight steady though varying around a level- it’s not just an iteration of the day before. Indeed if I suddenly started to lose pounds of weight without any change in exercise/diet I would worry about this surprising trend and go to see a doctor to examine potential underlying causes.

I’m sure with a moment’s thought you understand that global temperature is more like a homeostatic or centrally limited phenomenon than that which you describe here?

Just want to chime in/pile on here. This is a very nice writeup of a completely inappropriate statistical model. The _last_ thing you’d expect surface temperature to look like is a random walk. Given the extremely consistent nature of the energy input over the millennia, you’d actually expect the data to look like a fixed (NON-drifting) value plus some sort of probably-normal noise. There’s a little bit more correlation than that because of things like volcanoes and oceanic cycles, and on multi-thousand year scales due to both solar and orbit changes, but that’s it. Any sort of multi-decadal trend in the data, such as what’s been observed over the last 50 years, is evidence that the dynamics of the system are changing. The well-documented change in CO2 in the atmosphere over that same time period, with a well understood mechanism for driving temperature, is by far the likeliest explanation at this point.

This is a nice post.

Have you looked at previous work on this?

There is a paper by A. H. Gordon,

“Global Warming as a Manifestation of a Random Walk”

published back in 1991, which was discussed by statistician Matt Briggs.

There is also this more recent paper on random walks in climate data.

Some bloggers have also run random walk simulations, see for example this one and links therein.

You are, of course, not the first statistician to look into global warming and find that the case is weak.

Matt Briggs has been saying this for years on his blog.

A significant recent paper is

“A statistical analysis of multiple temperature proxies: Are reconstructions of surface temperatures over the last 1000 years reliable?” by McShane and Wyner, 2011. The answer is no – e.g. they say ‘We find that the proxies do not predict temperature significantly better than random series generated independently of temperature.’

This paper addresses a GW claim which you do not have in your list:

0. The current level of global temperature and the rate of change of temperature are unusual in historical terms.

(Links omitted to avoid going into moderation purgatory like previous comment).

You’ve done something wrong, but I’m not sure what.

If you take temps from 1980 to present, and temps from 1880 – 1980, you’ll find the average of the two periods is different. If you then shuffle all the temps and take the average of the first 40 (1980 – present) and compare that to the average of the rest (1880 – 1980), you’ll find that it’s very rare to get as big a difference as we get in reality. Which shows things are getting warmer, and doing so in a very non random way.

That your method does not reach the same conclusion suggests it is faulty. Though you may have accidentally shown something else interesting 🙂
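A minimal sketch of the shuffle test described in this comment, run on a simulated stand-in series (the data, `recentN`, `meanGap` and the seed are illustrative assumptions, not taken from the post). Note that shuffling the levels assumes every ordering of the yearly values is equally likely, which is a different null hypothesis from the resampled YoY changes used in the post, so the two approaches need not agree.

```r
set.seed(7)  # illustrative seed

# Stand-in series: 130 simulated annual anomalies with an upward drift.
# Replace with the real annual means to reproduce the commenter's check.
temps <- cumsum(rnorm(130, mean = 0.5, sd = 10))

recentN <- 40  # number of most recent years treated as the "recent" period

# Difference in means: most recent k values minus everything before them
meanGap <- function(x, k) {
  n <- length(x)
  mean(x[(n - k + 1):n]) - mean(x[1:(n - k)])
}

observedGap <- meanGap(temps, recentN)

# Null distribution: shuffle the levels themselves
trials <- 5000
shuffledGaps <- replicate(trials, meanGap(sample(temps), recentN))

# Share of shuffles with a gap at least as large as the observed one
pValue <- mean(shuffledGaps >= observedGap)
print(pValue)
```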

Oh my, I cannot believe that people are taking this seriously. Add some math and people go crazy. Just look at the possible temperature changes doing this… and what would they be running through the whole lifetime of the earth. Physics beats nonsense all the time…

Matt,

Congratulations, this is an excellent post for illustrating why statisticians should not analyze data they don’t understand. All you have shown is that the noise in the data from year to year is larger than the trend from year to year. Your own analysis shows that the data are not homoscedastic, and yet you plod on–oblivious of the differences between different periods in your dataset. And then, despite not understanding the science or the dataset and despite your analysis not supporting your model, you state a strong conclusion contradicting that of the experts. Bravo. Might I interest you in looking into the research of the good Doctors Dunning and Kruger.

Yes we should leave the predictions to the climatologists since they are “scientists” their predictions are so good, right?

http://anthonyvioli.wordpress.com/2012/10/22/quick-post-about-failed-global-warming-predictions/

Maybe their predictions would be better if they understood the “science” of math instead.

This is an excellent blog post. It’s nice to see someone enquire into something in such an open way. I am sure that there are other people doing the same research in far more sophisticated ways – however I’ve never seen someone do so with the same transparency and at a level I can hope to understand.

On a negative point, it’s sad to see people knock your work because the conclusion disagrees with their beliefs. It disagrees with mine too, but I will seek to work out whether there is a flaw in your argument, or I am wrong.

Well done. I will be back here again 🙂

People are knocking it because his assumptions violate physics, not because his conclusions disagree with theirs.

Namely: the statistical model assumes that the earth’s temperature could be determined by a random walk process – it could not.

Hi Andrew, this is an argument against the very first section of the posting, which he then rejects. In the second section he looks at autocorrelations. Could the earth’s temperature not be determined by an autocorrelated process? Is the Ornstein-Uhlenbeck process not an example of an autocorrelated process which is mean reverting?
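To make the distinction in this question concrete, here is a small sketch comparing a random walk with a mean-reverting AR(1) series, which is the discrete-time analogue of an Ornstein-Uhlenbeck process. Everything here is simulated; `phi = 0.8` and the seed are arbitrary illustrative choices, not estimates from the temperature data.

```r
set.seed(3)  # illustrative seed
n <- 5000
shocks <- rnorm(n)

# Random walk: each value is the previous value plus a shock (phi = 1)
walk <- cumsum(shocks)

# Mean-reverting AR(1): each value is pulled back toward zero by phi < 1
phi <- 0.8
ar1 <- as.numeric(stats::filter(shocks, filter = phi, method = "recursive"))

# Both series are strongly autocorrelated in levels...
lag1 <- function(x) cor(x[-length(x)], x[-1])
print(c(walkLag1 = lag1(walk), ar1Lag1 = lag1(ar1)))

# ...but only the AR(1) series has a variance that stays bounded over time
print(c(walkRange = diff(range(walk)), ar1Range = diff(range(ar1))))
```

Both series are driven by the same shocks, so any difference in how far they wander comes from the mean-reversion term alone.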

Matt,

One way to see that your analysis is incorrect is that it would consider all but the deepest excursions in Glacial/Interglacial periods as not significant at the 90% CL. You can draw whatever conclusions you wish from this, but I’d start to question my model.

You show that it is hard to reject your random walk null hypothesis using one particular test. Perhaps that means that the statistical test is a poor tool. Have you tested if the statistical tool works on the simulated data from a virtual world where we know that there is a strong forced signal?

It is reasonably easy to download such simulated global temperature data from the knmi climate explorer.

What a lovely post! Statistics mixed with philosophy, well set out and delightfully well written.

It reminded me of the rather infamously long VS/Bart thread – link below – which is too long to read but the statistician VS comments are worth a look http://ourchangingclimate.wordpress.com/2010/03/01/global-average-temperature-increase-giss-hadcru-and-ncdc-compared/

– J. Li is correct, variance has declined over the past couple decades.

– Of course GW won’t be an unbounded random walk, but this doesn’t establish a priori that short term changes, or even changes over the course of hundreds of years, couldn’t be viewed this way.

– The advantage of my ignorance of the science is that I can offer an independent analysis of the data. I thought this would be clear, but apparently not. If you are working off the same models and assumptions everyone else is using, it’s hard to come to a different conclusion.

– Alfredo (a commenter) posted a chart which shows that out of 13,950 peer-reviewed articles on climate since 1991, only 24 challenged the orthodox view. I thought at first this was a clever way to show the stifling prevalence of groupthink, but apparently this is being presented to prove that skeptics (of GW2? GW3? of any GW claim?) are “climate deniers” who should be dismissed outright. A question for you, the readers: do you believe the GW claims (from 2 on up) are 99.8% certain to be true? If so, which ones?

– A few of the comments and emails use an argument of the form: “We caused it, therefore it’s a trend.” This uses an assertion about causality to prove that an effect exists. Not good.

– I appreciate all of the comments, even the critical ones (the point about bounds to temperature moves is especially well taken, and I should have addressed this directly in the piece). However, no one seems willing to address the problems of falsifiability or the implied weakness of theories which are compatible with such a wide range of temperature outcomes.

Your problem here is that you’re not doing science, you’re just looking at a time series. Science includes theory and domain expertise, which you’ve chosen to ignore. Relatively simple physics says that the proper null hypothesis for the simplest possible model is a fixed temperature over time (plus noise), not a random walk. A random walk makes a specific mechanistic assumption about how the data was generated, and you’re not proposing any such thing, so don’t use it as a null hypothesis.

I’d advise you to delete this post. This is the sort of naive use of statistics that future employers may see and think poorly of.

Matt, as you are in Toronto I expect you are aware of Steve McIntyre and his blog, which often discusses statistical aspects of climate science. He wrote a post back in 2005 on random walks, with negative autocorrelation, and autoregression to deal with the boundedness question, complete with R code.

What you show here is that IF we assume that annual mean global temperature follows a random-walk, then it’s not that improbable that such a walk would produce the trend found in the temperature record since 1881. However, that just doesn’t show that your random walk hypothesis is a serious alternative to GW2. There’s some physics involved here, including the fact that a warmer earth, other things equal, emits more energy to space. But the random walk model assumes that there is no increase in the probability of a decline in global temperature when the prior year’s temperature was higher (and, on the other hand, no increase in the probability of a temperature increase when the prior year’s temperature was lower). I, for one, am glad that’s not how our planet’s climate actually works!

My, my. An “independent analysis”? You’re messing with physics here, chum. While the micro world is Heisenberg, the macro world is not. Effects have deterministic causes. That quants could crash the global economy at their whim is further proof. Finding the causes is not a statistical exercise, but a physical one. It’s just not always easy to ferret out the causes. In the case of global warming there are two facts (not theories) which matter:

– CO2 is a greenhouse gas, and larger amounts in the atmosphere raise the globe’s temperature

– humans have been loading up the atmosphere with CO2 **in addition** to any other natural sources

That there have been fluctuations, of duration longer than a few human generations, in earth’s temperature over the last 5 billion years doesn’t mean that **only** these previous causes are the **sole causes**. That’s just sophistry. The physics wins.

Further testing of assumptions is needed. In particular, a straight-forward ADF test with trend suggests that temperature changes are:

1) increasing over time;

2) negatively correlated to temperature levels (!);

3) uncorrelated to lagged temperature changes (once the effect of (2) is accounted for)

Only (3) should happen if indeed the temperature series is a random walk. If you ran your simulation analysis allowing for changes’ correlation with levels as well, your p-values would be much smaller.

I thought this was a great read, especially the in-depth discussion of your assumptions and methods. As a scientist myself, I often feel the need for more discussion of our own potential shortfalls than is usually presented in the academic journal format. I really appreciate this post.

[Disclaimer: I personally don’t come down on either side of the global warming debate.]

Regarding your methods:

I do agree with some of the commenters that a random walk is probably not the best approach when we’re talking a system so complex as surface temperature. However, just using averages over two spaces of time is not so strong, either. Neither approach allows for the complex interactions of weather patterns, solar effects, and the giant unpredictable heat sink that is our oceans. When the ozone was found to be eroding, two scientists predicted opposite results: one predicted warming where part of Earth’s heat would be reflected inward instead of escaping, and the other predicted cooling where part of the sun’s heat would be reflected away from the Earth. When a nominal increase in Earth’s average measurable surface temperature was discovered, the first theory was assumed to be completely correct. However, it is possible that both theories are correct to different degrees, and unfortunately, we currently have no way of checking.

Regarding your comment about the inverse relationship between years:

From a climate science view, this may not really make sense, but from a purely thermodynamics view, this is expected if our planet has a partial feedback loop. Due to the crazy heat capacity of water, the oceans in our planet can absorb an enormous amount of heat without us being able to detect the change. If the temperature rises, it would be expected for some heat to be absorbed by the oceans, which over time would decrease the ambient temperature. As the temperature decreases, the oceans would release some/all of the captured heat. In a closed loop, this would eventually reach steady state, but with the Earth being exposed to changing influences like the sun, moon, and rotation, the pendulum would continue to swing back and forth indefinitely. As I stated, though, this only makes sense from a purely thermodynamics perspective.

I disagree with your analysis on statistical grounds. Your methods will find no trend in even the strongest cases. see: http://blog.fellstat.com/?p=304

Ian, here is a simple example of applying the same method to data with noise and a trend. It yields a p-value of 0:

# Create random data with a clear trend plus noise

# Start the series at zero so the loop has something to build on
temps = rep(0, 1000)

for(i in 2:1000) {

temps[i] = temps[(i-1)] + 1 + rnorm(1)

}

# What is the final amount it climbed?

observedClimb = temps[1000]

rawChanges = diff(temps)

changes = rawChanges - mean(rawChanges)

plot.ts(cumsum(rawChanges), col="red", ylim=c(-1100,1100), lwd=3)

trials = 1000

finalResults = rep(0,trials)

for(i in 1:trials) {

# Sample from the centered changes

jumps = sample(changes, 1000, replace=T)

# Add lines to plot for this

lines(cumsum(jumps), col=rgb(0, 0, 1, alpha = .1))

finalResults[i] = sum(jumps)

}

length(finalResults[finalResults>observedClimb]) / trials

Now you put in a huge trend- the expected yearly increase is equal to the variance of the yearly noise. Just because you can simulate examples extreme enough that your method still rejects them does not mean that your method is correct for the original problem.

There are two or three huge problems that you still haven’t addressed. First, your null model is the wrong kind of null model- as mentioned by other commenters it should be noisy fluctuation about a mean and not a random walk.

Second, even if we accept the premise of using a time series model like AR1, there are issues with the way you are simulating the increments (stationarity is a strong assumption which others have pointed out does not seem to hold, and I mentioned that you are bootstrapping data which you admit is not independent).

Third, “The fact that I don’t know any of the science just means that my analysis is independent” is not a valid reason for making important conclusions based on models which ignore physical constraints.

Joshua I’m not sure you understand significance testing. If you want the p-value to be higher than 0, then reduce the trend relative to the variance and re-run the code. If you want a fully non-significant result, set the trend component to zero (note, even then you’ll get a p-value of below 0.05 once every 20 times – that’s how this kind of testing works). My code showed that Ian’s assertion – “Your methods will find no trend in even the strongest cases” – is flat out wrong; in the “strongest cases” my method finds the strongest possible evidence.

More broadly, all random walk simulations (which this is not – read the extensive section on accounting for non-independence, and note that this is not an AR1 simulation) are bounded, as is this simulation. In the real world everything is bounded. I’m not testing the data against a platonic ideal of a random walk; I’m doing an empirical test to see if you could get equally extreme results with no trend (but equal variance and correlation).

Beyond that, I’m not sure why people are so threatened by a pure data analysis approach to the data. If the scientific theories of CO2 convinced you of GW2 and beyond, so be it. I said as much in my post. But if you start with a model that essentially draws trend lines over temperature movements (ie the “technical analysis” nonsense), you shouldn’t be upset when someone finds the data compatible with other explanations.

Also (not to pick on you, Joshua), I’m still waiting for people to state which of the GW claims they believe and how strongly they believe them. And, while we’re at it, how about an honest assessment of the degree of falsifiability of your theories, and how the strength of these theories is impacted by the clear non-warming periods, even well into the era of industrial CO2 emissions?

Don’t worry about my understanding. Your example refutes Ian’s wording of “even in the strongest of cases.” That was just poorly chosen wording on his part. My point still remains- run your code with 130 time steps and an expected yearly change of 1/10th the noise level and you will lose significance. That would be a more honest comparison, and your method fails to detect the trend.
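The comparison proposed here (130 time steps, expected yearly change of one tenth of the noise level) can be checked by repeating the resampling test from the example above on many simulated series and counting how often it rejects "no trend". This is a rough sketch under simplifying assumptions: the noise is IID normal with unit variance, and `oneRunPValue`, `rejectionRate`, the 0.05 cutoff and the seed are illustrative choices rather than anything from the original post.

```r
set.seed(11)  # illustrative seed

# One run of the resampling test on a simulated series with nSteps
# yearly changes, each equal to drift plus unit-variance noise.
oneRunPValue <- function(nSteps, drift, trials = 500) {
  rawChanges <- drift + rnorm(nSteps)
  observedClimb <- sum(rawChanges)
  changes <- rawChanges - mean(rawChanges)
  finalResults <- replicate(trials, sum(sample(changes, nSteps, replace = TRUE)))
  mean(finalResults > observedClimb)
}

# Share of simulated series in which the test rejects "no trend" at 0.05
rejectionRate <- function(nSteps, drift, runs = 200) {
  mean(replicate(runs, oneRunPValue(nSteps, drift)) < 0.05)
}

weakTrend   <- rejectionRate(nSteps = 130, drift = 0.1)
strongTrend <- rejectionRate(nSteps = 130, drift = 1)
print(c(weakTrend = weakTrend, strongTrend = strongTrend))
```

The rejection rate is the test's power at that signal-to-noise ratio. A low value for the weak trend would mean that a failure to reject says little about whether such a trend is present.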

Why do you say your simulation is not a random walk? It is a random walk with non-independent increments. And the point is that a random walk is a very bad model for temperatures because of its limiting behavior. To consider how bad of a model it is, consider that the random walk has already been in operation for hundreds of millions of years without causing the extinction of all life. This is not just some platonic or purely mathematical objection. You’re cheating by choosing the wrong kind of model for the phenomenon in question.

Nobody is threatened by your ability to arrive at the wrong conclusions by applying the wrong kind of model. Climate scientists are not like day-trading “technical analysts.” They are actual scientists who study the physical world. In this analogy, YOU are the technical analyst ignoring the real world, telling people not to buy because you failed to detect a certain pattern which has nothing to do with the fundamentals. Climate scientists are like a large community of economists, business analysts, accountants, and so on, who have all arrived at the same conclusion after carefully studying mountains of evidence from countless sources.

And I’ll bite: GW1-4 are almost certainly correct. For 5 I don’t know enough to say whether or not humans are the *most* significant, but I am almost certain that they are an important cause. 6 is also worded poorly so I cannot agree with it outright- but we probably have the capacity to stop the trend if not actually reverse it. The main barriers to 7 are political and that may become the greatest and most absurd tragedy in the history of our species so far. 8 is also worded poorly- unintended consequences always occur, the question is whether they will be more damaging and the answer is no. As for 9, if there are technological fixes that are actually proven to be safe then we should probably use them too, and waiting is too risky. As for falsifiability, the theory makes many falsifiable predictions. So far many of those predictions have apparently been too conservative. And unfortunately I think we are doomed to find out about the rest of them in time.

This establishes nothing. All you have shown is that the current temperature is not extreme (in the sense of being high) IF temperature is a random walk. Which is obvious to everyone who knows what a random walk is (the variance goes to infinity with the length of the time series). A more sensible way of asking if the data is consistent with a random walk is to ask how likely a random walk is to generate a temperature path similar to the data. Say all points within 0.5 or 1 degree.

I did a quick CADFtest on your data:

CADFtest(theData$mean, type='trend')

ADF test

data: data$mean

ADF(1) = -4.4562, p-value = 0.002576

alternative hypothesis: true delta is less than 0

sample estimates:

delta

-0.353847

It seems that the hypothesis that the time series has a unit root is strongly rejected. I guess that’s strong evidence that the assumption of temperature behaving like a random walk is a poor one.
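For readers who want to see what a test of this kind does, here is a hand-rolled sketch of the ADF(1) regression with constant and trend, using only base R `lm`. It does not reproduce the `CADFtest` output or p-value above. The two series are synthetic placeholders, `adf_t_stat` is a name invented for this example, and the 5% critical value of about -3.45 is the approximate tabulated Dickey-Fuller value for roughly 100 observations with a trend term.

```r
set.seed(2)

# t-statistic on the lagged level in an ADF(1) regression with trend:
#   diff(y)[t] = a + b * t + delta * y[t-1] + gamma * diff(y)[t-1] + error
# A strongly negative t-statistic for delta argues against a unit root.
adf_t_stat <- function(y) {
  dy    <- diff(y)
  n     <- length(dy)
  d_now <- dy[2:n]            # current change
  d_lag <- dy[1:(n - 1)]      # previous change
  y_lag <- y[2:n]             # level just before the current change
  time  <- seq_along(d_now)   # linear trend term
  fit   <- lm(d_now ~ time + y_lag + d_lag)
  unname(coef(summary(fit))["y_lag", "t value"])
}

n_years <- 131

# Synthetic placeholders (NOT the real temperature record):
random_walk      <- cumsum(rnorm(n_years, sd = 0.1))               # has a unit root
trend_stationary <- 0.006 * seq_len(n_years) + rnorm(n_years, sd = 0.1)

t_rw <- adf_t_stat(random_walk)
t_ts <- adf_t_stat(trend_stationary)

# Approximate 5% Dickey-Fuller critical value (constant + trend, ~100 obs).
crit_5pct <- -3.45
cat("Random walk t-stat:     ", round(t_rw, 2), "\n")
cat("Trend-stationary t-stat:", round(t_ts, 2), "\n")
cat("Reject unit root for trend-stationary series:", t_ts < crit_5pct, "\n")
```

The statistic must be compared against Dickey-Fuller tables rather than the usual t distribution, which is why a dedicated package is normally used for the p-value.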

Thanks alefsin, I was looking for a test like this.

Sune’s suggestion (as I understand it) makes good sense: what does assuming it’s a random walk imply about what we should expect regarding global average temperatures over time? Testing this against past temperature records (instrumental or proxy) would give a direct test of this hypothesis. My prediction: it fails. A vanishingly small proportion of random walks would show the kind of long-run stability our climate displays, since random walks (as the posted graph shows) tend to wander far from the starting point over time…

Yep, Bryson Brown has nailed it. Run this type of random walk for 100,000 years, and you’ll have significant probabilities for a whole range of scenarios that will *never* happen. Therefore a random walk it isn’t.
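The wandering behaviour described in the last two comments is easy to check numerically. The sketch below assumes independent Gaussian yearly steps with a hypothetical standard deviation of 0.1 degrees (the post's own walk uses correlated, resampled increments, so this is a simplification), and shows that the spread of end points grows roughly like the square root of the horizon.

```r
set.seed(3)

step_sd  <- 0.1                    # hypothetical SD of one yearly change
horizons <- c(100, 1000, 10000)    # lengths of the walks, in years
n_sims   <- 500                    # walks simulated per horizon

# The final position of a walk is the sum of its steps; collect the
# standard deviation of those final positions for each horizon.
spread <- sapply(horizons, function(h) {
  finals <- replicate(n_sims, sum(rnorm(h, sd = step_sd)))
  sd(finals)
})

# Theory for independent steps: SD of the end point is step_sd * sqrt(h).
theory <- step_sd * sqrt(horizons)

print(data.frame(years = horizons,
                 simulated_sd = round(spread, 2),
                 theoretical_sd = round(theory, 2)))
```

Nothing in this sketch imposes a physical limit on excursions, which is the objection the commenters raise against using such a walk as a climate model.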

No, a random walk it is, it just ain’t no damn model of climate. The problem with this sort of approach is, as Andy Lacis points out, that climate series are subject to all sorts of annoying limitations on excursions such as conservation of energy.

I appreciate your analysis in this area. This field is difficult and in general suffers from

1. Poor definition and justification of the concept of a global temperature (how is it valid to combine disparate non equally spaced measurements taken at different times)

2. Poor cross validation of models

3. Poor predictive power of models

4. Promotion of the model itself as the science

5. Ad hominem attacks on heretics who question the consensus (good examples above)

The science was after all settled that gastric acid causes stomach ulcers …

First: When you claim that the negative 1-year autocorrelation is NOT regression to the mean, you’re wrong. The real climate system is hugely complex, and many of its components are dynamically unstable. That means that global temperatures are constantly overshooting and undershooting the equilibrium point, which is the ultimate cause of the short term variation in global temps. Those short term fluctuations are just that: regression to the mean global temperature. The problem is, the mean itself is changing, because the energy conditions that cause the temperature are changing. And ultimately conservation of energy requires the temp to come back home to where it “belongs”. Random walks do not have that real-world constraint, which is why your “simulation” doesn’t actually simulate the real world.

Nice try, though.

SECOND: If you want to take that real-world constraint into account (and that constraint is Conservation of Energy, which is a pretty big thing to ignore), instead of making the random walk non-independent (i.e., each year starts where the previous year ends) make it independent instead (i.e., each year starts at the defined overall mean — which in this case is the mean global temp as defined by CoE).

I’m guessing that you’ll have to run a whole lot of simulations to find any year that’s 7 standard deviations above the mean. Unless, of course, you add a trend. Which very nicely proves GW2.
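A rough sketch of this "independent" alternative, assuming each year is a Gaussian draw around a mean (the comment does not specify a distribution, so normality is an assumption here, as is the hypothetical trend of 0.06 standard deviations per year):

```r
n_years <- 131

# No trend: every year is an independent draw around a fixed mean.
# Chance that one given year lands 7 or more SDs above that mean.
p_single <- pnorm(7, lower.tail = FALSE)

# Chance that at least one of the 131 years does so
# (expm1/log1p keep precision for such tiny probabilities).
p_any_no_trend <- -expm1(n_years * log1p(-p_single))

# With a trend: the mean drifts upward by a hypothetical 0.06 SD per year,
# so later years need a much smaller shock to clear the same 7 SD bar.
trend_per_year <- 0.06
p_each_year    <- pnorm(7 - trend_per_year * seq_len(n_years),
                        lower.tail = FALSE)
p_any_trend    <- 1 - prod(1 - p_each_year)

cat("P(any year >= 7 SD), no trend:  ", signif(p_any_no_trend, 3), "\n")
cat("P(any year >= 7 SD), with trend:", signif(p_any_trend, 3), "\n")
```

Under these assumptions the no-trend case essentially never produces such a year, while the trending case usually does, which is the contrast the comment describes.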

I don’t know if it’s been mentioned in any of the articles posted, but using land temperature readings tends to understate the magnitude of temperature shift over time. The greater concentration of additional heat in the system is in the oceans, by a pretty sizable margin. You really need to use ocean temperatures to get a proper assessment.

Thank you for this. I often stand in awe at how much time I spend learning about something actually not learning about it.

This post is absolutely ridiculous. The earth’s temperature is a physical quantity that results from a radiative energy balance with space. The idea that this could be modeled with a ‘random walk’ model is just absolutely false. Please try to model a physical system with physics – not with a random number generator. If the earth’s temperature could be approximated with a random walk then it is obvious from your above graphs that the temperature would have long ago wandered out to an extreme value incapable of supporting life.

I’m very disturbed by the number of people complimenting Matt Asher for a nice analysis that is in reality anything but, for reasons clearly and repeatedly explained by numerous commenters above.

Asher has made a fundamental error of assumption of the physics involved, and is modelling something that does not occur in this universe. It’s no different to setting a sniffer dog to catch a prisoner, and all it does is track a fox across the county. Doesn’t matter how well the dog sniffs – if it can’t follow the correct scent it’s no better than a host for fleas.

I agree with Harlan. Delete this post, or at the least have the integrity to admit that this analysis is fatally and irretrievably flawed.

The article makes reference to Pascal’s Wager. I’d like to point out environmentalists use a variation of this known as the ‘precautionary principle.’ It’s easy to spot once you understand the flawed logic. The danger of this logic is how it can be used to justify any outcome you prefer.

http://www.newworldencyclopedia.org/entry/Precautionary_principle

It’s commonly used as the reason we must do something about AGW. Once you understand the unsound logic it’s easy to spot it in action, such as in this popular clip.

http://www.youtube.com/watch?v=zORv8wwiadQ

Also note how his decision matrix lacks probability and describes outcomes that can be just one of four extremes. It assumes we can do just the right thing and there’s no downside as a result (no black swans). It appears to be risk management to the uninformed.

More importantly it’s anti-scientific. It shifts the burden to those who must prove a negative. The fallacy is known as the ‘argument from ignorance’ and it’s the opposite of the scientific method.

Matt,

When applying statistical tests to a physical system, the physical constraints should be taken into account.

Let me offer two analogies to which there is less ideological opposition:

– If my weight gain could be statistically described as a random walk, would that mean that whatever I eat or whatever I exercise has no relevance for my weight (since, according to the stat test, it is -or rather could be- all random)?

(see also http://ourchangingclimate.wordpress.com/2010/04/01/a-rooty-solution-to-my-weight-gain-problem/ )

– Consider a boat at sea. It has both a sail (being dependent on the wind – i.e. natural variation) and an engine (i.e. radiative forcing).

The skipper puts the engine on full blast and steers the boat from, say, Holland to England.

Would anyone wonder whether it’s just the wind that’s pushing the boat over the Canal (because its movement could be described as a random walk)?

With regards to climate, it is not all that different:

“So the process of the net incoming (downward solar energy minus the reflected) solar energy warming the system and the outgoing heat radiation from the warmer planet escaping to space goes on, until the two components of the energy are in balance. On an average sense, it is this radiation energy balance that provides a powerful constraint for the global average temperature of the planet.”

In other words, conservation of energy provides a constraint on the earth’s average temperature. A random warming trend would result in a countering radiative forcing to bring the radiation budget back in balance, unless the energy is coming from other parts of the climate system (e.g. the oceans or cryosphere), which isn’t the case since they are also warming up.

If your results go against conservation of energy, chances are that your physical interpretation is incorrect.

Quoting James Annan:(http://julesandjames.blogspot.nl/2012/11/polynomial-cointegration-tests-of.html )

“Fundamentally, the argument that a time series passes various statistical tests indicating consistency with a random walk, tells us nothing about whether it actually was generated by a random process. Especially when we happen to have very good reasons to believe that it was not…”

Hi Bart,

Is it possible that, on a small enough scale, the changes in direction of your boat are essentially random?

If you start with the assumption that someone must be steering the rudder, how far “off course” would they have to get before you reconsidered your assumption?

Might there be a problem in drawing a trend line over the boat’s changes in direction over the past 10 minutes and saying “see, he’s turned away from Boston and is headed to Lynn”?

I’ll admit I invited some of the criticism by using the term “random walk”, which invites complaints that idealized random walks can wander off to infinity, and also brings with it the baggage of time series analysis. I did my best in the piece to evaluate the ways in which I wasn’t treating it as a pure random walk, but it probably would have been better to call the null hypothesis: YoY changes represent a structured random process with no trend.

As with all of my posts here (see the website motto or the manifesto page), I don’t so much model (models try to see if the data conforms to platonic distributional forms) as simulate. You are correct that my ability to simulate the data as structured, trendless movements doesn’t disprove GW2, but then, I said this in my piece. However, the analysis does show that the 131 years of data themselves provide no evidence to reject this hypothesis. All of the evidence to reject has come in the form of arguments which begin with the assumption of a guy at the rudder, then state that the data doesn’t show there is no guy there!

To be as clear as possible, many of the GW claims are highly extreme, in terms of estimated temperature changes (all of the extreme predictions made 10 years ago have failed) and how we should change our lives. Do the data provide strong enough evidence to support this?

At this point, none of the proponents of the consensus views on GW have been willing to state their degree of belief in the different GW claims.

None have pointed to an alternative data set that’s more convincing (or any other data set). None have posited an alternative method that doesn’t start with an assumption of a trend then require that it be disproved.

None of the proponents have addressed the points I raised about degrees of falsifiability, biases (including our tendency to see patterns and the anthropic principle), the problematic nature of Pascal’s Wager arguments, or the possibility that the greater change in temperature in the second half of the data is purely a result of greater variance in YoY temperature changes.

Then you had better address the issue of what forcing changed the variance (hint: this is typical of physical systems whose internal energy has increased and an increase in internal energy generally means a higher temperature in thermal systems). Waving the magic wand is not an aid to understanding.

What is happening here, BTW, is that to defend your analysis you are having to add more and more caveats, a sure sign of problems with the original analysis.

1) Matt, now you are inventing new terminology. “[A] structured random process with no trend?” The term random walk is not only used in the context of independent increments. What you are simulating IS a random walk. The fact that the increments are correlated does not change its asymptotics (limsup = +infty, liminf = -infty), as you can guess from looking at your plot. And again, I am not just raising this objection because of the asymptotics- but because a random walk is the wrong kind of model to use and you are effectively cheating by using it.

*** I challenge you to explain your reasoning for choosing a random walk for your simulation, instead of something like linear regression or splines.

2) “As with all of my posts here (see the website motto or the manifesto page), I don’t so much model (models try to see if the data conforms to platonic distributional forms) as simulate. … [The] data … provides no evidence to reject this hypothesis.”

What you are simulating from is a model- a random walk model with non-independent increments sampled from an empirical cdf. Your hypothesis is that the data are well-represented by this model, and you failed to reject that hypothesis. Now, leaving aside the point above that this is the wrong kind of model and that makes it unduly difficult for the data to cause rejection of the hypothesis, as others have pointed out we could still reject this hypothesis using a different statistic. The statistic you chose was just the temperature difference at the end of 130 years. That statistic is not a sufficient statistic for this model (by far- it loses a huge amount of information).

*** I challenge you to consider some other statistics and provide p-values. For example, consider the integral of the square of the sample path, and report a p-value for the probability of observing a smaller square integral than the data.
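The statistic proposed in this challenge could be computed along the following lines. This is a sketch under several assumptions: `observed` is a synthetic placeholder rather than the real record, the path is measured relative to its starting value, the integral is approximated by a sum over yearly points, and the null walks resample demeaned changes independently rather than using the post's non-independent scheme.

```r
set.seed(5)

# Placeholder for 131 annual anomalies (hypothetical, NOT the real record),
# measured relative to the first year.
n_years  <- 131
observed <- 0.006 * seq_len(n_years) + rnorm(n_years, sd = 0.1)
observed <- observed - observed[1]

# Discrete stand-in for the integral of the squared sample path
# (yearly spacing, so the integral reduces to a sum).
square_integral <- function(path) sum(path^2)

# Trendless null: resample the demeaned year-over-year changes.
changes <- diff(observed) - mean(diff(observed))

n_sims    <- 5000
sim_stats <- replicate(n_sims, {
  walk <- c(0, cumsum(sample(changes, replace = TRUE)))
  square_integral(walk)
})

# Probability, under the null, of a square integral as small as the data's.
obs_stat <- square_integral(observed)
p_value  <- mean(sim_stats <= obs_stat)
cat("Observed square integral:", round(obs_stat, 2), "\n")
cat("P(simulated <= observed):", p_value, "\n")
```

Unlike the end-point difference, this statistic uses every year of the path, so it can distinguish walks that merely finish near the data from walks that stay near it throughout.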

(As an aside: I think you undervalue models. Of course they are platonic, but they serve a purpose for which we don’t yet have anything better. Language serves to represent things so that we can reason about them and communicate, but nobody would mistake a definition of a chair in a dictionary for an actual chair. Similarly, careful modeling allows us to reason about things and understand them. And understanding is vitally important, it is the difference between a scientist who actually knows stuff about the real climate and a hobbyist blindly writing code and producing graphs that have no connection whatsoever to the thing he thinks he is analyzing)

3) “At this point, none of the proponents of the consensus views on GW have been willing to state their degree of belief in the different GW claims.”

Okay, I’ll bite harder this time:

1-4 all greater than 99%. I would say 100*(1-epsilon)%, but I hesitate to be that certain about a topic I don’t know much about based solely on the expertise of others, and I guess the climate may be a sufficiently complex system so that it’s possible the experts have missed something.